The Problem with an API Built on Scraping: Too Slow! Let's Try Caching It.

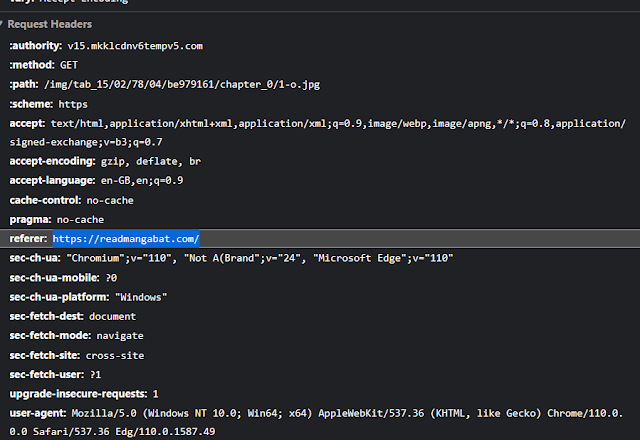

Hi, me again. And yes, it's about that MangaBat unofficial API. After using it for a while, I noticed that scraping is too slow to do inside a synchronous request. And if I sent the API 100 requests in a minute, I would definitely get banned by the source website.

So I "cache" the scraped data with an expiration time of 3 hours. Within those 3 hours, requests to the RetrieveMangaDetail API or the RetrieveChapterPages API are served from the cache. Once the 3 hours have passed, the next request re-fetches the data and caches it again. Of course, the user also needs an option to send a command that re-fetches the data manually, without having to wait for the 3 hours to pass.

So I modified my API to support "caching" by saving the scraped data into the database via Django models. First, create a model to "cache" the manga detail:

    class MangaModel(models.Model):
        url = models.URLField(primary_key=True)
        result = models.JSONField(null=True, default=None)
        lastFetchedAt = models.DateTimeField(